Moto 360 Review! 2 Months with an Android Wear Smartwatch

Inbox by Gmail First Impressions & Invitations!

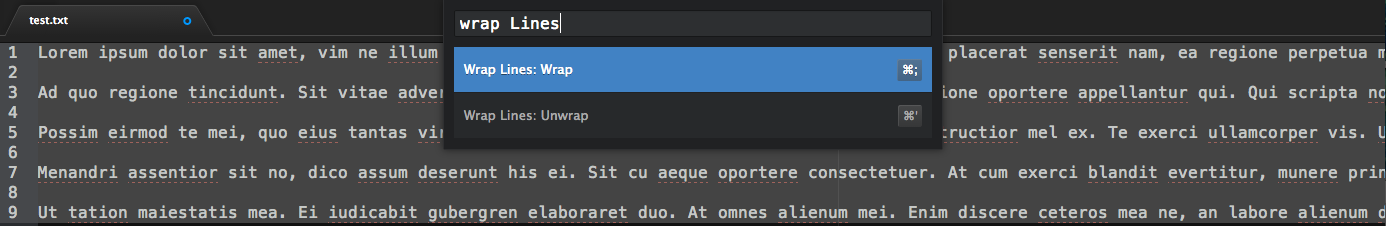

Atom package for wrapping lines

Even though I have always seemed to fall back to Vim in a terminal for my coding, I have been using Atom (with SSHFS) for some time now and for reasons I don’t quite understand yet, I’ve not really felt the urge to stop. My initial impressions were that Atom is a Sublime knock-off with some more flexibility, so I expected after a short time of playing with my invite, to go back to my tried and true Vim since that’s what I did with Sublime. But here I am, and I’m more convinced to stick with it some more now that it has been open sourced. The ‘gq’ wrap lines feature was one I sorely missed, so I thought I’d try to script it.

I was surprised at how easy and quick it was to code a package for it, and to subsequently make available to everyone else through their package management system (the image above takes you to the package). On a slightly related note, my friend Rob pointed me to this interesting Vim modernization project.

Lamport Stresses Thinking Before Coding

More specifically, he suggests some of the ‘thought’ before coding should be formalized as a specification (like a blueprint) written in the language of mathematics. The TLA specification language uses satisfiability of boolean formulas as a mathematical way to express initial states and the possibilities for a state to transition to other states. The framework provides the programmer a way to (1) think in depth about the possible states before coding and running test cases, (2) better document what an implemented piece of code does making it more convenient for updates later, and (3) automatically find bugs from logical inconsistencies.

This video provides more motivation and examples for what I said above, including a couple of anecdotes that mentioning the use of TLA by Amazon and Microsoft. Overall, I think he made a good argument that putting more effort in the specification can lead to better design decisions down the line.

A couple of notes:

(1) This approach is mostly about ‘functional’ specification, so it provides little assistance in implementing such a specification. In this way, it is largely independent of programming language choice/design, compilation, and optimization in practice. Many specific algorithms could satisfy a functional specification. In his talk, I got the impression that these other issues were trivial in comparison to specification, which I don’t think is often the case. However, I certainly agree that more thought should be spent at the specification stage, if possible.

(2) Large-scale adoption of this formalism seems like it would be difficult. There is the obvious overhead in teaching people to understand and implement this formalism in their work. Even if knowledge of TLA was part of a programmer’s toolkit, this type of formalism may be far more beneficial for specific, more ‘predictable’ problems. By predictable, I mean that a programmer (or computer) can consider the possibilities of desirable and undesirable states a priori. For example, the task “write a program to produce a pleasing sound” is not only a larger/more general problem, but it also very difficult to specify it exactly. This is an extreme example, but less extreme versions of it are a very common and practical form of programming (e.g. programs that are highly dependent on human satisfaction during use). For these applications, past intuition, seeing how you, a test group of people, and even your customers respond to your program may provide the most valuable information for improvement.

Leslie Lamport is a computer scientist working at Microsoft Research. He recently won the Turing Award (one of the highest honors in computer science), and ever since I learned about Lamport clocks, he has been one of my favorite CS academics.

Consider the Nimrod Programming Language

Mid-April Update: Thanks for the thoughts everyone! The post quickly grew to >10,000 views and reached #10 on Hacker News for a while. I continue to enjoy the language for a multitude of applications from basic scripting to ‘hard work’ tasks.

I love to play around with new computer programming languages. Even though I spend most of my time in industry tested standards for their respective applications (e.g. Java, C, C++, Python, Javascript, …), I think there are a lot of good reasons–especially these days–to learn and experiment with new languages. The impact of modern language development isn’t limited to a cottage industry of computer scientists and programmers. Take the growing Scala language as an example. Twitter transitioned from a framework primarily using Ruby to Scala to scale their service and to maintain a programming model they desired. I also believe we are finally beginning to see languages that are elegant, expressive, and, importantly, fast. For example, these days, a company using two industry standards like Python and C++ might do ‘heavy lifting’ in C++ and write a lot of ‘high level’ wrapper code and scripts in a language like Python. Why not use just one language? Why is Python ‘slow’ for some tasks and C++ ‘unpleasant’ for scripting tasks? A good language should be expressive enough to elegantly express domain-specific tasks while allowing the programmer to make the things that need to be fast, fast.

Why the competition may not quite fit the bill

I could just list out great Nimrod features and say: ‘consider it’, but I don’t think that these features are very useful without some explanation of why these features provide an overall better experience than other compelling languages. When it comes to picking a programming language that attempts a speed-elegance unification, there are a lot of choices. The five on the ‘short list’ that I discuss in this post are:

There are other options that I could put on this list like Haskell or Go, and I have my reasons for picking the 5 above, but I don’t want to discuss them right now. What I would like to do is convince you that Nimrod is a particularly nice language to consider since the design decisions they made, to me, result in an elegant, expressive, and fast language (though I understand people have different syntactic preferences). These are my initial thoughts after nearly three weeks of coding a lot in Nimrod. I am writing this because I think the language needs to get more attention than it has, and it deserves to be taken seriously as a worthy competitor to the other four mentioned above.

Of course this is not a super-detailed comparison, but overall, I hope I provide some reasons you may want to consider Nimrod over these other very nice languages. They all have stuff to offer over Nimrod and vice-versa. And there are also a number of overlapping features. Ideally I would like to have a highly expressive, fast language that is what I call “K&R-memorable” which basically means that it approximately as easy to understand it as it is to understand C (all you do is read K&R and you’re good).

C++11 has really brought C++ a long way. Coding with it results in a lot less boiler-plate code and it did a reasonable job of incorporating higher-order functions and handy value-semantic preserving features such as move semantics. However, there’s still a lot of boiler plate (e.g. it’s 2014 and I’m still writing header files separate from source because compilation time with header-only files is too slow?), and now I need to implement more operators for classes to preserve value semantics (don’t forget to implement the move assignment operator!). So C++11 is nice and incorporates some modern features, esp. because it works with all other C code, but it’s much too complex, and I think, far less elegant than the other alternatives.

Scala and Rust are both very interesting languages (in general, simpler to understand than the totality of C++11). I have had a good deal of experience with Scala and have played with Rust for a couple of minor tasks. Both languages implement traits. To me, traits are a far more elegant way of adding similar functionality to different objects when compared with multiple inheritance. But my experience with Scala has shown me that while it is easy to use libraries, it is harder to design them in the midst of a complex graph of how objects and traits are related to one another. I spent a lot of time engineering the types to be just right, which is great, but it was also frustrating and I felt that the safety I desire at compile time would be more easily achieved without such a complex system. I will discuss some design decisions made by Nimrod below that I think result in less time spent on type nitpicking and more time spent on getting the ‘job done right’ with reasonable safety features. Rust provides more built-in memory safety, which is great, but understanding how it all works and the ‘conversations’ with the compiler after coding can be frustrating. Believe it or not, sometimes hard guarantees with types/traits and memory are not worth the pain when programming (i.e. the programmer effort required, mental model and syntactic complexity). I think this is precisely why the adoption of a slow dyamically duck-typed language like Python has been so successful. They’re ‘easy’ to program in. I think Nimrod is a happier medium.

Julia’s motivation comes from two places. The language resembles typical scientific programming syntax (ala Matlab and Pylab) that executes fast when compiled, and offers extensive and intuitive metaprogramming capabilities since it is homoiconic like Lisp. (And the scientist in me really likes the IJulia notebook feature that they have apparently worked quickly to develop.) I will show some examples below on how Nimrod offers a powerful and elegant metaprogramming environment without necessarily being homoiconic. My only real concern with Julia is lower-level systems programming. Forced garbage collection can be a game-changer here, and I’m not sure I think its choice of being ‘largely’ dynamically typed is a good one in this setting either. Providing a library developer some level of type annotation and type class restriction can be useful for engineering purposes and more helpful when dealing with compile-time errors. I work in the area of computational biology and I am left wondering: is Julia the right language to build the fastest read aligners, gene expression estimators, etc.? These tools are often written in C/C++, so Julia code would have to beat that! A similar sentiment applies to Scala: it’s dependence on the JVM has actually resulted in very poor performance in even a simple multicore application, in my experience.

Quick start with Nimrod

OK, so you should read the tutorial and eventually the manual on the web site to get a quick start and get to know the language better, but I’ll tell you how I started using it: as a scripting language. I know this isn’t the best for ‘performance’ testing, but any language that has this ‘unification’ quality should be equally good at scripting as it is for high-performance applications. Here is a simple example:

import os

proc shell(cmd: string) =

if os.execShellCmd(cmd) != 0:

raise newException(EOS, cmd & "returned non-zero error code")

proc fexists(fname: string) : bool =

try: discard Open(fname)

except EIO: return false

return true

const fromScratch = false

shell "clear"

if fromScratch:

echo "Removing cached files and log"

shell "rm -rf nimcache log.txt"

echo "All output in log.txt"

echo "Compiling ..."

shell "g++ -fPIC -O3 -shared -std=c++11 bla.so bla.cpp 2>&1 >> log.txt"

# More of the pipeline, e.g.

if not fexists("blah.txt"): createBlah()

else: useBlah()

This, to me is a very clean way to do basic shell scripting while having the power of a full programming language.

Nimrod avoids ‘over-objectifying’

OK, so that was relatively straightforward. Here is a simple example of how to create a matrix type (partially inspired from a stackoverflow post):

type Matrix[T] = object

nrows, ncols: int

data: seq[T]

proc index(A: Matrix, r,c: int): int {.inline.} =

if r<0 or r>A.nrows-1 or c<0 or c>A.ncols-1:

raise newException(EInvalidIndex, "matrix index out of range")

result = r*A.ncols+c

proc alloc(A: var Matrix, nrows,ncols: int) {.inline.} =

## Allocate space for a m x n matrix

A.nrows = nrows

A.ncols = ncols

newSeq(A.data, nrows*ncols)

proc `[]`(A: Matrix, r,c: int): Matrix.T =

## Return the element at A[r,c]

result = A.data[A.index(r,c)]

proc `[]=`(A: var Matrix, r,c: int, val: Matrix.T) =

## Sets A[r,c] to val

A.data[A.index(r,c)] = val

iterator elements(A: Matrix): tuple[i:int, j:int, x:Matrix.T] =

## Iterates through matrix elements row-wise

for i in 0 .. <A.nrows:

for j in 0 .. <A.ncols:

yield (i,j,A[i,j])

proc `$`(A: Matrix) : string =

## String representation of matrix

result = ""

for i in 0 .. <A.nrows:

for j in 0 .. <A.ncols:

result.add($A[i,j] & " ")

result.add("\n")

The first thing to notice is that a matrix is an object type that contains data and its number of rows and columns. All the methods take a matrix as the first argument. This matrix is generic on any type Matrix.T. An alternative syntax where ‘[T]’ comes after a procedure name may also be used. Nimrod uses a uniform call syntax that implies these two calls are equivalent:

A.alloc(nr,nc): ... alloc(A,nr,nc)

Notice that ‘elements’ is an iterator. This is a very efficient iterator called an ‘inline’ iterator. You can read more about this in the tutorial and manual. The `$` operator before a variable is the standard ‘to string’ operator. This allows you to do:

echo A

and a matrix will be printed out.

The uniform call syntax is a simple way to support a lot of ‘call-chaining’ like behavior commonly seen in object-functional programming and avoids forcing methods to be in objects. As an example, say I have a BigInt, and a little int and I want to be able to support addition of them. In Nimrod, you simply write a procedure that overloads the ‘+’ operator and it works (otherwise you get a compile time error). In a language like Scala, you define what’s called an ‘implicit conversion’ to do this for you. The added idea of an implicit conversion on objects and having to define them so explicitly seems more complex than just overloading the operator. Note that there are other cases where you would like to use implicit conversions and Nimrod provides this capability. Calls to procedures in Nimrod can be ‘pass by reference’ in C++ world:

proc test(x:var int) = x=5 var x = 3 echo x test(x) echo x

results in:

3 5

The compiler will chose the appropriate method at compile time to call based on the types in the procedure. Nimrod also supports multiple dispatch.

Nimrod has an intuitive type system

As mentioned above, traits are a nice way of defining components of functionality tied to an object and the compiler will error out if certain traits are required, but missing, for example. I also mentioned that this can lead to complexities in library design and engineering (which may be good or bad depending on your perspective and the outcome).

One feature of Nimrod that’s appealing is that it offers the programmer type classes — the ability to group types into a single type (e.g. define float and int to be of type number), and distinct types — the ability to create two different types corresponding to the same underlying data type (e.g. dollars and euros are both ints in their tutorial example). Similar to type classes, Nimrod also allows constraints on generic types, and support for additional constraints is in the works. So the compiler will provide an error message if a method is not defined for a particular class of types its defined on or if a desired method is missing. Traits appear to be a formalism that could be useful, but might result in a lot of added complexity given the capabilities already provided by type classes and distinct types. Nimrod also supports an effects system which allows for additional compile-time safety checks.

You will want to metaprogram in Nimrod

Nimrod makes it easy to extend the language and the abstract syntax tree to generate the code you want. Say I wanted to do an openMP-like parallel for using Nimrod’s threads over a shared sequence of data. The thread code looks a lot like this:

template parallelFor[T](data:openarray[T], i:expr, numProcs:int, body : stmt) : stmt {.immediate.} =

let numOpsPerThread = (data.len/numProcs).toInt

proc fe(j:int) {.thread.} =

for q in 0 .. numOpsPerThread-1:

let i = j*numOpsPerThread+q

body # so something with data[i]

var thr: array[0..numProcs-1, TThread[int]]

for j in 0 .. numProcs-1:

createThread(thr[j], fe, j)

joinThreads(thr)

But using the template as defined above, I can just do:

parallelFor(sequence, i, numProcs):

# do something with sequence[i]

Note: this is just a toy example showing how to do some handy metaprogramming using a lower level version of threads. The developers are working on a much better way of handling threads and parallelism at a higher level. The tutorial defines a debug statement (a variation of it which I use a lot) that shows how you can actually modify the abstract syntax tree.

C code and Memory Allocation

The typical compilation scheme in Nimrod I use is to compile to C code. They introduce a nimcache/ directory with all the C and object code for me to inspect to see how efficient it is compared to what I would write in C. It’s fairly straightforward to link into C code if you must.

The C code Nimrod generates often appears indistinguishable in speed when compared to hand-crafted C code I made in certain examples. Nimrod is much more pleasurable to program in than C, and the compile-time and run-time error messages are far better than C.

Also, I’d like to note that Nimrod allows for manually allocated memory and low-level operations to provide the developer ‘C-like’ control. In most cases the standard libraries using the GC are appropriate, but in some cases you may want to manage your own data on the heap and Nimrod allows for this.

Young language, helpful community

The Nimrod language is young and has a handful of developers working on making it to a 1.0 release. The Nimrod community has been very helpful to me and I think it has a lot of potential.

I’m writing this post based on my experiences so far. I would really appreciate any feedback if I’m wrong or misrepresented a language I discussed. The post will be modified accordingly with acknowledgement.

Thanks to Rob Patro and the Nimrod community for useful discussions.

Getting Closer to a Star Trek Computer?

Reading this recent Tech Crunch article made me think that we may be a step closer to the day that we can talk to our computers and get information that we want as if we are conversing with the computer. That is, Google’s goal (and arguably the goal of many other companies) of the Star Trek Computer. Siri or Google Now isn’t even close to this yet. Why? Because the problem is hard. The article linked to above reminds me of a keynote at KDD 2012 by Robin Li, Founder and Chairman of Baidu, China’s largest search engine. His talk was less about established problems in Machine Learning and more about the problems that the company faces. One example is the ability to do voice recognition across the various dialects of Chinese and in the presence of background noise.

Reading this recent Tech Crunch article made me think that we may be a step closer to the day that we can talk to our computers and get information that we want as if we are conversing with the computer. That is, Google’s goal (and arguably the goal of many other companies) of the Star Trek Computer. Siri or Google Now isn’t even close to this yet. Why? Because the problem is hard. The article linked to above reminds me of a keynote at KDD 2012 by Robin Li, Founder and Chairman of Baidu, China’s largest search engine. His talk was less about established problems in Machine Learning and more about the problems that the company faces. One example is the ability to do voice recognition across the various dialects of Chinese and in the presence of background noise.

In any case, I think the voice-interacting Star Trek computer is one of the largest unsolved problems in Tech. I look forward to the day that I can be driving in my car or walking the dog and say, “Computer, inform me about the history of public education in the United States …”. In a future post, I may compile a list of specific technical issues that have prevented us from this goal along with areas of research containing valiant attempts to solve parts of this problem. I welcome emails and comments from AI/Machine Learning buffs with pointers :).

Open Doors with Google Hangouts

In an earlier post, I mentioned that active forms of communication like video chatting and telephone conversations can be pretty unnatural when you consider family and friends just ‘hanging out’ at home together, for instance. I also mentioned that maybe video chat can be treated in a more passive manner to encourage a more passive, natural chatting style.

In an earlier post, I mentioned that active forms of communication like video chatting and telephone conversations can be pretty unnatural when you consider family and friends just ‘hanging out’ at home together, for instance. I also mentioned that maybe video chat can be treated in a more passive manner to encourage a more passive, natural chatting style.

Typically, video chat invitations need to be handled immediately: you either have to accept or reject the invitation to chat right away. One of my previous roommates and I had a neat system: the degree to which our doors were open indicated our openness to a ‘spur of the moment’ of chat.

Ideally, a similar approach could work for a video chat where the request to chat doesn’t require an immediate response. You ‘open your door’ to other people and they will be informed. They can drop by so long as your door is open.

A nice Google Calendar feature allows you to create an event (you could even call it ‘Door’s open’) and everyone who is listed on the event can follow a hyperlink to a Google+ Hangouts video chat. It’s a subtle difference from the traditional video chat invite: instead of querying a friend and waiting for a response, you just send a link to them (e.g. via an email or text message). They can join if they want. Or not. No big deal. I think this is a more concrete suggestion for how to use tech to improve our communication with close family and friends than the high level thoughts I posted before. I’ll be trying it out and posting my ‘results’. Could be nice to streamline the process with an app, but this ‘hack’ allows me to try it out first!

Initial Thoughts on ‘The Hobbit: The Desolation of Smaug’

Just saw this movie. I really enjoyed the adventure, action, and suspense throughout it, and would recommend watching it in the theaters. After I saw the movie, I looked up reviews on it and agree with many others that Smaug was an awesome dragon to experience. Also, I like that it helps build up to the story told in Lord of the Rings and branches beyond the fun tale that I remember reading as a child.

Just saw this movie. I really enjoyed the adventure, action, and suspense throughout it, and would recommend watching it in the theaters. After I saw the movie, I looked up reviews on it and agree with many others that Smaug was an awesome dragon to experience. Also, I like that it helps build up to the story told in Lord of the Rings and branches beyond the fun tale that I remember reading as a child.

Normally I don’t write about my thoughts after a movie, but for some strange reason, I feel like I have to after this one.

Smaug and great action/adventure don’t make a great story or trilogy. I was more touched by the relationship between Bruce Willis and Ben Affleck in Armageddon than any pair of characters so far in The Hobbit, maybe with the exception of Bilbo and Gollum in the first Hobbit. The Lord of the Rings (LOTR) movie trilogy is a masterpiece: it explored the a number of characters, pairs of characters, triples, etc. and their development together (of course way better than Armageddon). Action and adventure are great, but with just those two, I feel empty without character development. I’m surprised so many reviews focus on the adventure and action as opposed to character development, especially since it is natural to compare The Hobbit to LOTR. I also don’t agree that this sequel is such a ‘leap’ above the first movie. To me, they’re both about the same when it comes to what matters: the story.

As it stands, the series so far is ‘good’. It could have been ‘great’ by exploring a few characters in more depth (Thorin in particular). Since The Hobbit is such a short book, the creators of this movie series have a unique opportunity to contribute to the development of these characters by expanding a short book into three movies. They certainly have in many respects, but I argue that they should have focused on character development more. I don’t mind a few poorly done special effects if what results is a better story with characters that I can relate to.

Automating Organization and ‘Sticky Items’

I had a nice in-person discussion after the last post. The basic idea is that there is an overwhelming amount of manual effort that goes into organizing a hierarchy. I think this is a really good point, and I really like the idea of ‘automating’ the organization process or algorithms providing suggested or ‘default’ organizations. This being said, I also like the idea of user input in the process. Even if you have a nice automated way to organize your data, what if you really want to keep something organized the way it is? This approach would allow you to access your data in a way that you specify. For example, in a snapshot of my Google Play music library above, what if I wanted the three items in blue ‘stuck’ or ‘pinned’ at those locations? That would be preferable to typing in a search bar or scrolling through a list of albums automatically sorted by some criteria. This hybrid approach allows user interfaces to provide automated suggestions for organization while at the same time letting the user keep certain things where they want them. Another example is phone apps. Right now we manually organize our apps on our home screen(s), but what if apps are organized, at least in part, by how often they are used while certain commonly used apps are ‘sticky’ because you like them there?

I had a nice in-person discussion after the last post. The basic idea is that there is an overwhelming amount of manual effort that goes into organizing a hierarchy. I think this is a really good point, and I really like the idea of ‘automating’ the organization process or algorithms providing suggested or ‘default’ organizations. This being said, I also like the idea of user input in the process. Even if you have a nice automated way to organize your data, what if you really want to keep something organized the way it is? This approach would allow you to access your data in a way that you specify. For example, in a snapshot of my Google Play music library above, what if I wanted the three items in blue ‘stuck’ or ‘pinned’ at those locations? That would be preferable to typing in a search bar or scrolling through a list of albums automatically sorted by some criteria. This hybrid approach allows user interfaces to provide automated suggestions for organization while at the same time letting the user keep certain things where they want them. Another example is phone apps. Right now we manually organize our apps on our home screen(s), but what if apps are organized, at least in part, by how often they are used while certain commonly used apps are ‘sticky’ because you like them there?